
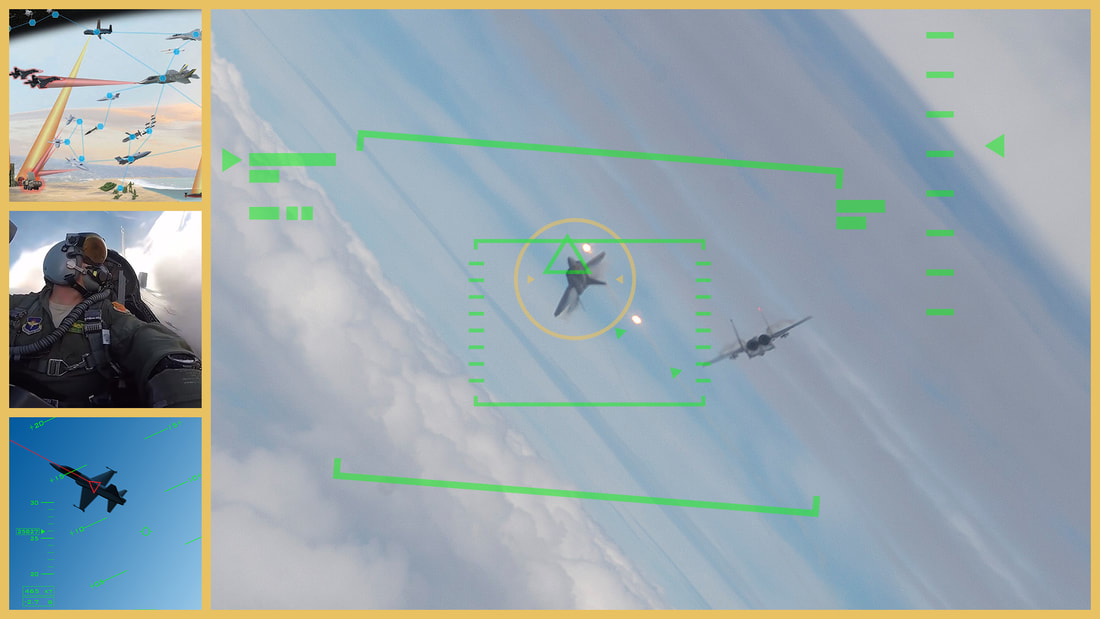

By Catherine Rodrigues ’23 (Images Credit: Courtesy of the author)

The notion of using artificial intelligence (AI) to fly fighter jets provokes a discussion of the pros and cons of military adoption, and of potentially replacing human pilots with technology. But what happens when professionals put this idea to the test? In mid-August, Aurora Flight Sciences, EpiSci, Georgia Tech Research Institute, Heron Systems, Lockheed Martin, Perspecta Labs, physicsAI and SoarTech participated in the Defense Advanced Research Projects Agency’s (DARPA) AlphaDogfight Trials to see whose AI “pilot” would come out on top. They competed at the Johns Hopkins University Applied Physics Laboratory for one of the top four spots in the contest to face an F-16 pilot in a simulator.

On Aug. 20, the AI algorithm created by Heron Systems beat a pilot known by the call sign “Banger,” a member of the District of Columbia Air National Guard who had recently completed the Air Force Weapons School’s F-16 instructor course. The AI won 5-0 across five different but realistic scenarios.

Col. Daniel Javorsek, program manager of DARPA’s Air Combat Evolution program, which includes the AlphaDogfight Trials, said the event’s goal was to build confidence in the feasibility of using AI in combat aircraft. If the event was able to convince just a couple of pilots, said Javorsek, “then I’m considering it a success. That’s the first steps I need to create a trust in these sorts of agents.” Javorsek also noted that even if DARPA had a perfectly prepared system today, it would take approximately 10 years to put an AI into a combat fighter jet such as an F-15 or F-16.

Although “Banger” had more than 2,000 hours in the F-16, the algorithm had a few advantages over him. For example, the AI did not follow the Air Force’s basic maneuvering rules, including the rule against closing within 500 feet when firing.
It can also compute adjustments in approximately a nanosecond, whereas humans must observe and think carefully about their next move. This raises the question: Can AI fighter pilots make moral and ethical decisions in the air? Even after extensive research, DARPA is not sure. AI has shown it can hold its own, and it may soon be able to act as an autopilot.

To see what such a moral and ethical decision can look like, consider the USS Indianapolis. On the third day after the ship was sunk by Japanese torpedoes, Lt. Wilbur Gwinn, a seaplane pilot flying a routine patrol over the Pacific Ocean near the Philippines, was trying out a new antenna. The antenna kept losing its signal, and when he went back to fix it, he spotted an oil slick, which suggested a submarine nearby that he and his crew planned to attack. As they got closer, however, he saw what he described as “cucumber bumps on the oil slick,” and he soon realized that those bumps were men in the water. He and his crew were confused because there had been no reports of an SOS from enemy or allied ships (it was later found that the ship had lost its signal). He flew closer and radioed “ducks on the pond” as he began to see hundreds of men stranded in the water, with sharks surrounding and attacking them.

One of the seaplanes sent to the scene was piloted by Adrian Marks, who shared the belief of the first pilot to call in the sinking that rescue ships would have to carry the men. Marks was not allowed to land near the site, because his plane was designed for smooth-water landings and the sea had 12-foot swells. As he stared into this terrifying situation, he faced a complicated ethical conflict: Should he break the rules to help save these men? Was this the right decision for him to make? It might not be safe, but if the risk could save lives, was it worth it? He decided it was, brought his plane down onto the water, and rescued 53 people. Marks’ seaplane, a PBY Catalina, could not hold all the men, so he strapped the rest onto its 1,500-square-foot wing with parachute wire, saving men who most likely would not have survived otherwise. In an extreme yet significant case like this, one can ask: Would an AI fighter pilot be able to make the kind of conscious decision Marks made? Could even the most advanced AI pilot reach a sound decision on the spot while weighing the risk? Risk will run its course, and so will the military, which will keep testing new technology to strengthen and carefully improve itself.

Sources: Air Force Magazine; “Leadership Under Fire: Optimizing Human Performance” podcast, “What the USS Indianapolis Tells Us About Resilience with Sara Vladic and Lynn Vincent”

